Medical AI Models

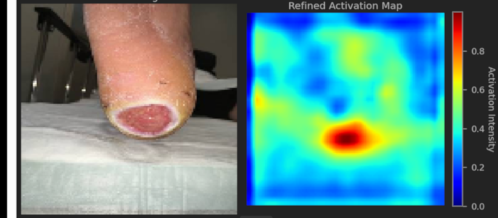

Vision Transformers for Wound Detection

Our advanced vision transformer models automatically identify and classify wounds from medical images. They assist healthcare professionals in prioritizing patients and recommending timely treatment, even in resource-limited settings.

On-the-Go Healthcare

Mobile Application for Wound Diagnosis

This smartphone app leverages AI to provide rapid wound assessments and preliminary treatment suggestions. Users simply upload photos, helping clinicians or caregivers expedite decisions and reduce complications.

Autonomous Drones

AI for UAV Systems

Our lightweight models enable drones to navigate autonomously, perform reconnaissance, or deliver supplies with minimal human input. Ideal for agriculture, security, search-and-rescue, and more.

Next-Gen Prosthetics

AI-Enhanced Prosthetic Limbs

Our innovative prosthetic limbs combine neural networks with modular 3D-printed parts. They adapt in real time to user movements, offering intuitive control and energy efficiency, which is especially crucial in conflict-affected regions.

Personalized Suggestions

Recommender Systems

Our recommendation engines use real-time analytics to match products or content with each user's unique preferences. E-commerce sites, streaming services, and training platforms can all boost satisfaction and engagement.

Predictive Insights

Forecasting and Analytics Tools

We develop robust analytics dashboards for sales, operations, and resource management. By leveraging advanced time-series algorithms, businesses can anticipate trends, avoid bottlenecks, and allocate resources more efficiently.

Eigenmode Decomposition

Dynamic Mode Decomposition for Discovering El Niño

DMD provides a way to identify and isolate the dynamic structures that are most important in the evolution of the system over time. In DMD, the modes are determined from the eigenvectors of a matrix that approximates the linear operator governing the evolution of the system. These modes, called DMD modes, represent coherent structures in the flow that grow, decay, or oscillate at a fixed frequency. Each mode is associated with a specific frequency and growth or decay rate, which are given by the eigenvalues of the same matrix.
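
The eigenvector and eigenvalue construction described above can be sketched in a few lines of NumPy. This is a minimal illustration of the standard "exact DMD" recipe on a synthetic signal, not the El Niño analysis itself; the function name `dmd`, the test signal and the chosen rank are assumptions made for this example.

```python
import numpy as np

def dmd(snapshots, dt, rank):
    """Exact DMD sketch: columns of `snapshots` are states at successive times."""
    X, Y = snapshots[:, :-1], snapshots[:, 1:]        # pairs (x_k, x_{k+1})
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank, :]    # rank-r truncation
    YVS = Y @ Vh.conj().T / s                         # Y V S^{-1}
    A_tilde = U.conj().T @ YVS                        # reduced linear operator
    eigvals, W = np.linalg.eig(A_tilde)               # discrete-time eigenvalues
    modes = YVS @ W                                   # DMD modes (spatial structures)
    omega = np.log(eigvals.astype(complex)) / dt      # growth rate + i * frequency
    return modes, eigvals, omega

# Synthetic data (assumed for illustration): two spatial patterns
# oscillating at angular frequencies 2.3 and 2.8 without growth or decay.
x = np.linspace(-10, 10, 200)
t = np.linspace(0, 4 * np.pi, 100)
dt = t[1] - t[0]
f1 = (1 / np.cosh(x + 3))[:, None] * np.exp(2.3j * t)[None, :]
f2 = (2 / np.cosh(x) * np.tanh(x))[:, None] * np.exp(2.8j * t)[None, :]
data = f1 + f2

modes, eigvals, omega = dmd(data, dt, rank=2)
print("growth/decay rates:", omega.real)
print("angular frequencies:", omega.imag)
```

The real part of each `omega` is the growth or decay rate of the corresponding mode and the imaginary part is its angular frequency, which is how the eigenvalues of the reduced matrix are read in practice.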

Eigenmode Decomposition

Forward and Inverse PINN for the heat equation.

The blog post meticulously explores the utilization of Physics-Informed Neural Networks (PINNs) in solving forward and inverse problems related to the heat equation. It emphasizes the significant enhancement in training efficiency achieved through the integration of Residual Networks (ResNets) and Fourier features. The post candidly discusses the challenges encountered with non-homogeneous solutions and the potential of a well-structured Neural Network architecture in overcoming these hurdles. Special attention is given to the tune_beta parameter in optimizing deep learning architectures. The incorporation of Fourier features is shown to significantly bolster the expressive capability of the network.
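
As a rough illustration of what a Fourier feature layer does, the sketch below maps space-time inputs through random sinusoids before they would reach the network. It assumes the common random Fourier feature construction; the post may use a different variant, and the names `fourier_features`, `B` and `scale` are hypothetical choices for this example.

```python
import numpy as np

def fourier_features(x, B):
    """Map inputs x of shape (N, d) to [cos(2*pi*x B^T), sin(2*pi*x B^T)]."""
    proj = 2.0 * np.pi * x @ B.T
    return np.concatenate([np.cos(proj), np.sin(proj)], axis=-1)

rng = np.random.default_rng(0)
scale = 5.0                                  # assumed frequency scale
B = scale * rng.standard_normal((16, 2))     # 16 random frequencies for (x, t)
xt = rng.uniform(0.0, 1.0, size=(100, 2))    # sample points in the space-time domain
feats = fourier_features(xt, B)
print(feats.shape)
```

A larger `scale` exposes the network to higher frequencies, which is typically what helps it fit sharper solution features.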

Neural Networks (PyTorch)

Adversarial Attacks on CIFAR-10

Adversarial attacks on neural networks were introduced by Goodfellow et al. as techniques to fool models through small perturbations applied to the input data. As a result, the accuracy of a trained model can drop to almost zero. At the same time, the perturbed inputs, e.g. images, are almost indistinguishable from the originals to humans, which matters for AI solutions in self-driving cars, the military, or medicine. In this post, I set up a trained model on CIFAR-10 and demonstrate how the applied perturbations shift classifications towards false negatives, which can be easily visualised through confusion matrices.
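
To make the idea of a perturbation concrete, here is a minimal sketch of the fast gradient sign method (FGSM). It is applied to a tiny hand-built logistic-regression classifier in NumPy rather than to the CIFAR-10 network from the post, and the weights, input and `eps` are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(x, w, b):
    """Predicted class (0 or 1) of a logistic-regression model."""
    return int(sigmoid(w @ x + b) >= 0.5)

def fgsm(x, y, w, b, eps):
    """Move x by eps in the sign of the loss gradient with respect to the input."""
    # For binary cross-entropy on a logistic model, dLoss/dx = (p - y) * w.
    grad_x = (sigmoid(w @ x + b) - y) * w
    return x + eps * np.sign(grad_x)

# Toy model and input (assumed values for illustration only).
w, b = np.array([2.0, -1.0]), 0.0
x, y = np.array([0.3, 0.1]), 1
x_adv = fgsm(x, y, w, b, eps=0.3)

print("clean prediction:", predict(x, w, b))
print("adversarial prediction:", predict(x_adv, w, b))
```

Each coordinate moves by at most `eps`, yet the predicted label changes. On a deep network the same update is used, with the gradient obtained by backpropagation instead of the closed form above.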

Option Pricing (Python)

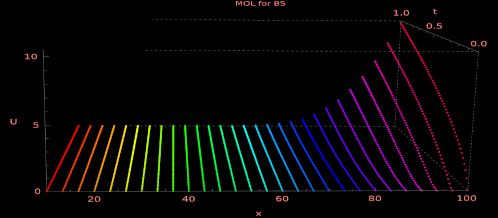

Explicit Method of Lines for European Option

The Method of Lines (MOL) is a semi-analytical approach to solving PDEs in which the space dimension is discretised while time stays continuous. The result is a system of ODEs coupled through a matrix operator, which is then integrated in time. MOL is well suited to option pricing because it reduces the estimation error. The method is accurate, relatively fast and, in general, reliable. Nevertheless, the stability of the explicit scheme still depends on the space-grid discretisation.
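
A minimal sketch of the explicit variant for a European call under Black-Scholes is given below. It assumes central differences on a uniform price grid and uses plain explicit Euler as the ODE integrator, which may differ from the integrator used in the post; the grid sizes and market parameters are illustrative. The number of time steps `N` is chosen so that `dtau` stays below roughly `1 / (sigma**2 * M**2)`, which is the grid-dependent stability restriction mentioned above.

```python
import math
import numpy as np

def bs_call(S, K, r, sigma, T):
    """Black-Scholes closed-form price of a European call (reference value)."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    cdf = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return S * cdf(d1) - K * math.exp(-r * T) * cdf(d2)

def mol_explicit_call(K, r, sigma, T, S_max, M, N):
    """Space-discretised Black-Scholes ODE system, stepped with explicit Euler."""
    S = np.linspace(0.0, S_max, M + 1)
    i = np.arange(1, M)                      # interior node indices
    alpha = 0.5 * sigma**2 * i**2            # diffusion coefficients
    beta = 0.5 * r * i                       # drift coefficients
    V = np.maximum(S - K, 0.0)               # payoff at tau = 0 (expiry)
    dtau = T / N
    for n in range(1, N + 1):
        # Right-hand side of dV/dtau = A V at the interior nodes.
        rhs = (alpha - beta) * V[:-2] - (2 * alpha + r) * V[1:-1] + (alpha + beta) * V[2:]
        V[1:-1] += dtau * rhs
        V[0] = 0.0                                        # call is worthless at S = 0
        V[-1] = S_max - K * math.exp(-r * n * dtau)       # deep in-the-money boundary
    return S, V

S, V = mol_explicit_call(K=100.0, r=0.05, sigma=0.2, T=1.0, S_max=400.0, M=200, N=2000)
price_mol = float(np.interp(100.0, S, V))
price_bs = bs_call(100.0, 100.0, 0.05, 0.2, 1.0)
print(price_mol, price_bs)
```

Reducing `N` well below the stability limit makes the explicit iteration blow up, which is the practical cost of the explicit scheme.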

Option Pricing (Python)

Implicit Method of Lines for European Option

In this post, I present MOL for the European option. This time it is the implicit method, which is unconditionally stable and allows for a more accurate price approximation.
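
The implicit idea can be sketched with backward Euler applied to the same space-discretised Black-Scholes system: each step solves a linear system instead of evaluating the right-hand side directly. This is an illustration under assumed parameters (uniform grid, central differences, a dense solver for brevity), not necessarily the scheme used in the post.

```python
import math
import numpy as np

def mol_implicit_call(K, r, sigma, T, S_max, M, N):
    """European call via space discretisation and backward Euler in time."""
    S = np.linspace(0.0, S_max, M + 1)
    i = np.arange(1, M)
    alpha = 0.5 * sigma**2 * i**2
    beta = 0.5 * r * i
    lower, diag, upper = alpha - beta, -(2 * alpha + r), alpha + beta
    # Tridiagonal operator A of the ODE system dV/dtau = A V (+ boundary terms).
    A = np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)
    dtau = T / N
    lhs = np.eye(M - 1) - dtau * A
    V = np.maximum(S - K, 0.0)               # payoff at tau = 0 (expiry)
    for n in range(1, N + 1):
        V[0] = 0.0                                        # boundary at the new time level
        V[-1] = S_max - K * math.exp(-r * n * dtau)
        rhs = V[1:-1].copy()
        rhs[0] += dtau * lower[0] * V[0]                  # known boundary contributions
        rhs[-1] += dtau * upper[-1] * V[-1]
        V[1:-1] = np.linalg.solve(lhs, rhs)
    return S, V

# Only 100 time steps: far coarser than an explicit scheme on this grid would tolerate.
S, V = mol_implicit_call(K=100.0, r=0.05, sigma=0.2, T=1.0, S_max=400.0, M=200, N=100)
price_implicit = float(np.interp(100.0, S, V))
print(price_implicit)
```

For these parameters the Black-Scholes closed-form value is about 10.45, which gives a reference point for the printed price. On larger grids a dedicated tridiagonal solver would replace the dense `np.linalg.solve`.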

Universal Approximation (PyTorch)

Physics-Informed Neural Network

In Physics-Informed Learning, a neural network is trained by means of the inherent physical laws encoded a priori. As a result, we obtain data-efficient approximators able to reconstruct physical states. This post shows how a neural network approximates the solution of the PDE Heat Equation, $U_{tt} - c^2 U_{xx} = 0$, by minimising a loss function made up of the physics cost, i.e., the residual of the PDE evaluated at some (not many) points in the interior and on the boundary of the space-time domain. The physics cost works as a regulariser for the supervised boundary cost. Namely, we deal with the forward problem here.

Linear Algebra in Machine Learning (Python/NumPy)

Singular Value Decomposition (SVD) in Machine Learning

SVD is the foundation for many ML algorithms and tasks such as PCA, dimensionality reduction (e.g. image compression), data denoising, linear autoencoders and matrix completion. Imagine we want to compress a data matrix $X$ from dimension $s$ to $0 < k < s$. Namely, we approximate the matrix $X \in \mathbb{R}^{s \times n}$ by an SVD matrix factorisation subject to $\text{rank}(R) = k$ such that $$\underset{U_k,U_k^T}{\min } \left\| X-X U_k^T U_k \right\| _{\text{Fro}}^2=\underset{\left\{\sigma _j\right\}_{j=1}^{k < \min (s,n)}}{\min }\left\| X-\underbrace{U \Sigma_k V^T}_{R}\right\| _{\text{Fro}}^2$$ Understanding SVD will be necessary for the later posts on robust PCA and matrix completion.
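
The rank-$k$ approximation can be checked numerically with a short NumPy sketch. It assumes a random matrix of shape `(s, n)` and stores the first `k` left singular vectors as the columns of `U_k`, so in this convention the projection is written `U_k @ U_k.T @ X`; the sizes and the seed are arbitrary choices for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
s_dim, n, k = 20, 50, 5
X = rng.standard_normal((s_dim, n))

# Thin SVD: X = U diag(sigma) V^T with singular values in decreasing order.
U, sigma, Vt = np.linalg.svd(X, full_matrices=False)

# Rank-k approximation R = U Sigma_k V^T (keep the k largest singular values).
U_k = U[:, :k]
R = U_k @ np.diag(sigma[:k]) @ Vt[:k, :]

# Projecting X onto the span of the leading singular vectors gives the same matrix.
projected = U_k @ U_k.T @ X

# Squared Frobenius error equals the sum of the discarded squared singular values.
error = np.linalg.norm(X - R, "fro") ** 2
discarded = np.sum(sigma[k:] ** 2)
print(error, discarded)
```

The equality of `error` and `discarded` is the Eckart-Young result: truncating the SVD gives the best rank-$k$ approximation in the Frobenius norm.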

Linear Algebra in Machine Learning (Python/NumPy)

Robust PCA

Singular value decomposition (SVD) and principal component analysis (PCA) are powerful tools for finding low-rank representations of data matrices. However, many data matrices are only approximately low-rank, e.g., due to measurement errors and artefacts of other non-linear influences on the data acquisition process. Suppose we are given a large data matrix $X \in \mathbb{R}^{n \times s}$ and know that it may be decomposed as $X = L + S$, where $L \in \mathbb{R}^{n \times s}$ has low rank and $S \in \mathbb{R}^{n \times s}$ is sparse. The aim is to recover the low-rank component $\hat{L}$ and the sparse component $\hat{S}$ by solving the following convex optimisation problem: $$\left(\hat{L},\hat{S}\right)=\underset{L\in \mathbb{R}^{n\times s},\,S\in \mathbb{R}^{n\times s}}{\arg \min } \left\{\| S\| _1+\alpha \| L\|_* \quad \text{subject to} \quad X=L+S\right\}$$ In this post, we will discuss a PCA variant that is robust towards outliers in the data. Before I introduce the method, I want to first reformulate the problem of finding low-rank approximations.
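
One common way to solve this convex problem is an ADMM / augmented Lagrangian iteration that alternates singular value thresholding for $L$ with entrywise soft thresholding for $S$. The sketch below is a minimal version under stated assumptions: the default `alpha = sqrt(max(n, s))`, the penalty parameter `mu`, the stopping rule and the synthetic test matrix are conventional choices picked for this example, not values taken from the post.

```python
import numpy as np

def shrink(M, tau):
    """Entrywise soft thresholding, the proximal operator of tau * ||.||_1."""
    return np.sign(M) * np.maximum(np.abs(M) - tau, 0.0)

def svt(M, tau):
    """Singular value thresholding, the proximal operator of tau * ||.||_*."""
    U, sig, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * shrink(sig, tau)) @ Vt

def robust_pca(X, alpha=None, max_iter=1000, tol=1e-7):
    """ADMM sketch for min ||S||_1 + alpha * ||L||_*  subject to  X = L + S."""
    n, s = X.shape
    if alpha is None:
        alpha = np.sqrt(max(n, s))                    # assumed default weight
    mu = alpha * n * s / (4.0 * np.abs(X).sum())      # assumed penalty parameter
    L, S, Y = np.zeros_like(X), np.zeros_like(X), np.zeros_like(X)
    for _ in range(max_iter):
        L = svt(X - S + Y / mu, alpha / mu)           # low-rank update
        S = shrink(X - L + Y / mu, 1.0 / mu)          # sparse update
        residual = X - L - S
        Y = Y + mu * residual                         # dual (multiplier) update
        if np.linalg.norm(residual) <= tol * np.linalg.norm(X):
            break
    return L, S

# Synthetic test: rank-2 matrix plus about 5% large sparse corruptions.
rng = np.random.default_rng(0)
L0 = rng.standard_normal((60, 2)) @ rng.standard_normal((2, 60))
mask = rng.random((60, 60)) < 0.05
S0 = np.zeros((60, 60))
S0[mask] = rng.uniform(-10.0, 10.0, size=mask.sum())
X = L0 + S0

L_hat, S_hat = robust_pca(X)
rel_err = np.linalg.norm(L_hat - L0) / np.linalg.norm(L0)
print("relative error of the low-rank part:", rel_err)
```

Unlike a plain truncated SVD, the sparse term absorbs the gross outliers, so the recovered low-rank part is not pulled towards them.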

Cryptocurrencies with Machine Learning

Market Capitalization of cryptocurrencies with Pandas and Plotly

The first part of a series on applying Machine Learning to financial data. Here I focus on the use of Pandas and show Plotly routines for making effective, responsive visualisations.

Functional Analysis

Solutions from Introductory Functional Analysis by Kreyszig

Here are solutions to exercises from Kreyszig's great book on functional analysis. These exercises are an indispensable minimum for a machine learning engineer. If you spot a mistake or know a better approach to any problem, I would be happy to hear about it.